Oct 8, 2024

Discover Valo’s secure AI approach in their AI agent for Salesforce administration.

Introduction

In this post, the Valo Labs team explores the importance of a secure AI agent for Salesforce administrators, highlighting how Valo’s approach helps automate Salesforce management tasks while prioritizing security.

Securing Generative AI Systems

As generative AI systems (GenAI) continue to expand their influence across various industries, ensuring their security becomes an essential focus in today's technological landscape. While the adoption of AI introduces new challenges, it also presents opportunities to enhance our security practices and build more resilient systems.

A critical concern is the need to vet the models themselves for potential backdoors and risks from third or fourth parties. Moreover, inadequate input validation can lead to the exposure of sensitive training or contextual data, and can potentially result in the generation of malicious commands that affect downstream processing automation.

While the AI stack is complex, with various potential risk points, understanding these challenges allows us to develop and implement robust security measures. With a thoughtful approach and continuous improvements, we can effectively manage these risks and harness the power of AI in a secure and responsible manner.

AI Security Challenges

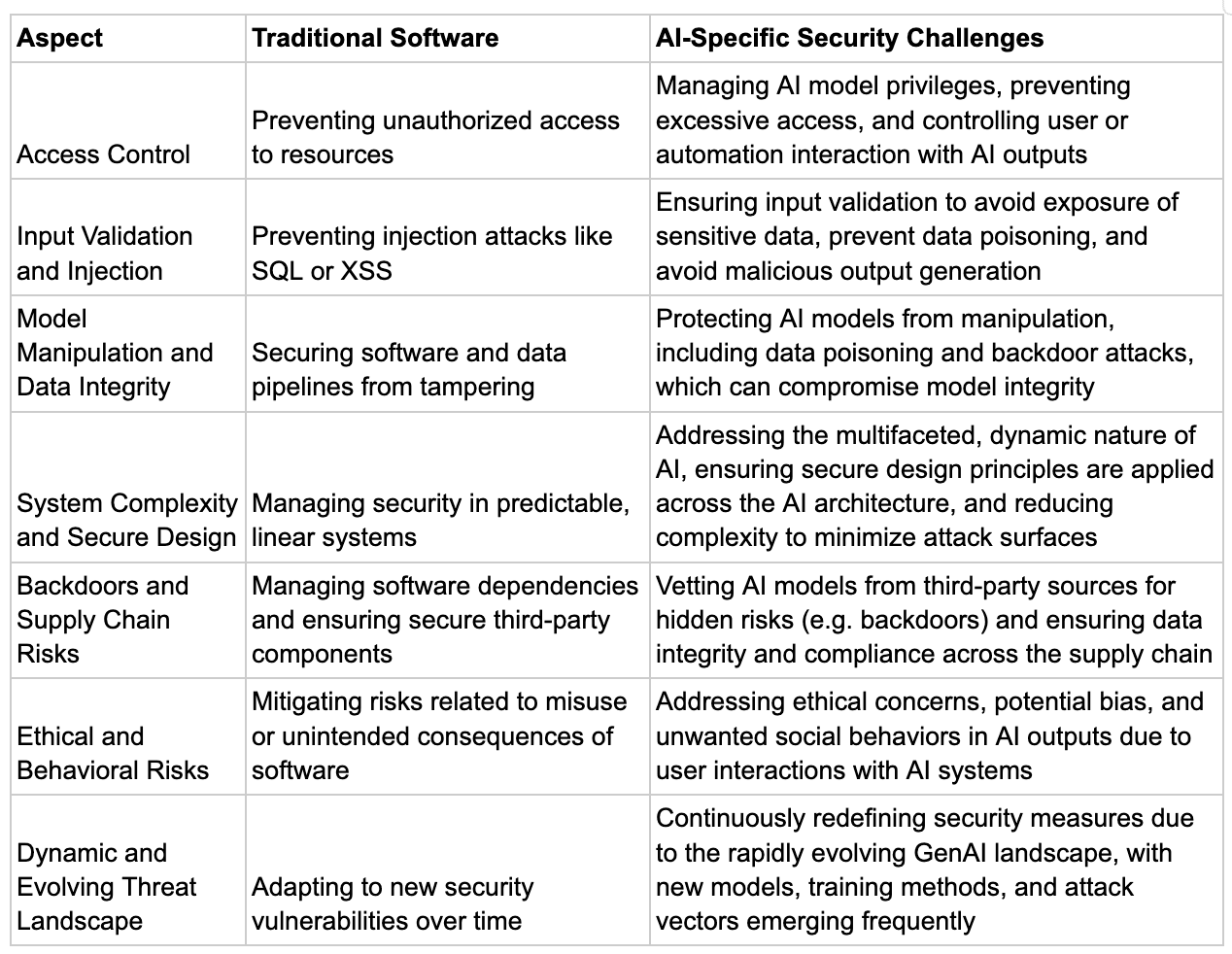

The security challenges in AI systems share similarities with traditional software vulnerabilities but often present unique complexities due to the nature of AI models and their integration into various systems.

While traditional software security focuses on code-level vulnerabilities, access control, and data protection, AI security must also consider the integrity of training data, the potential for model manipulation (data poisoning), and the unpredictable nature of AI outputs.

Allowing users to interact with AI directly also raises ethical concerns and the risk of unwanted social behavior. Both domains face challenges in supply chain security, input validation, and unintended system behavior, but AI systems add further complexity around interpretability, data exposure, and manipulations that are more sophisticated and harder to detect.

Table: Comparison of traditional and AI-specific security challenges.

Reference here.

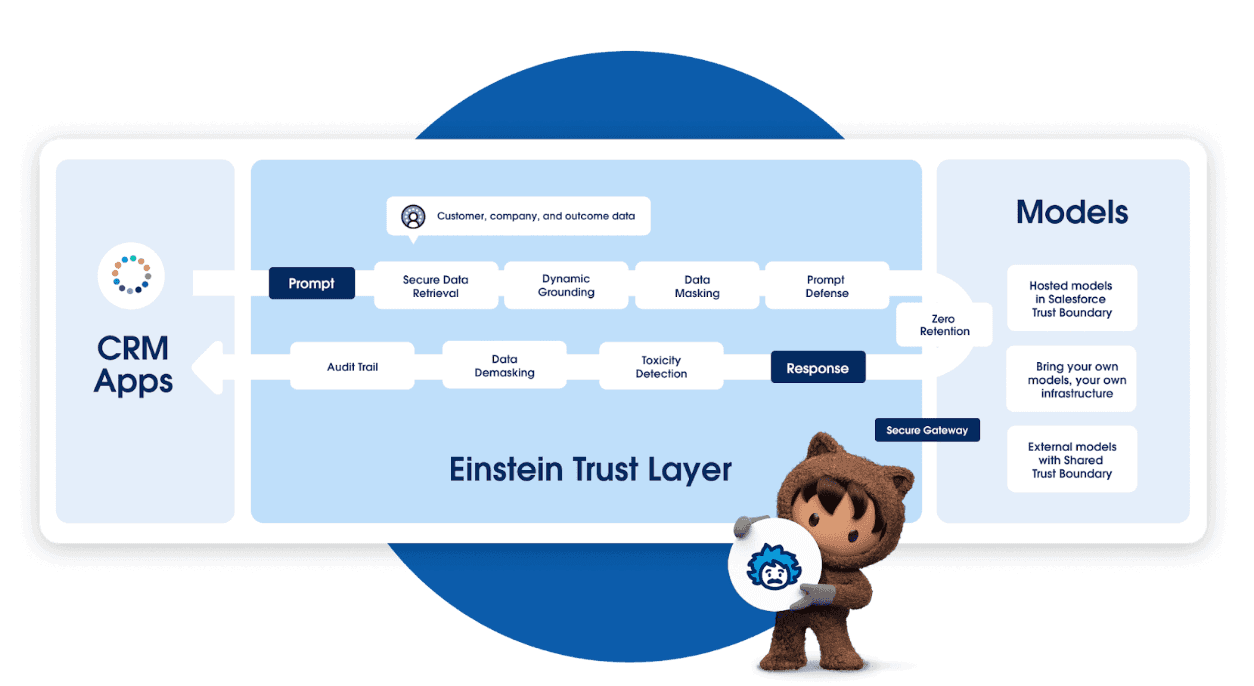

Salesforce's Current Approach to AI Security

The challenges in AI security are significant, and implementing GenAI securely at scale requires a more nuanced approach than treating it as a monolithic system of input and output with some validation in place. For instance, Salesforce's AI platform, Einstein 1, demonstrates a sophisticated architecture integrated into its ecosystem. It employs multiple AI capabilities, including predictive, generative, and conversational AI, all grounded in the customer's own data. The system implements secure data retrieval, dynamic grounding, and data masking, which limit AI access to contextually relevant and appropriately protected data. Features like prompt defense, zero retention, and toxicity detection further enhance security.

The platform supports various deployment options, including hosted, customer-owned, and external models within a trust boundary, allowing for customization while maintaining security. This multi-layered approach significantly mitigates risks related to data access and output generation, illustrating how thoughtful system design can address many AI security concerns while enabling context-aware AI assistance across different business functions.

Link to video explaining Salesforce Einstein Trust Layer here.

Learning from Salesforce’s own platform, we can see that building a secure SaaS platform that utilizes GenAI requires a granular, multi-layered architecture that controls and constrains both inputs and outputs through multiple specialized models. This approach can ensure overall system security even when individual AI models have inherent vulnerabilities.
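To make the layering idea concrete, here is a minimal Python sketch of a request passing through an input guard, a grounding step, a model call, and an output guard. Every name in it (`input_guard`, `ground`, `output_guard`, `answer`, the `required_role` field) is hypothetical and chosen for illustration; it does not represent Salesforce's or Valo's implementation. The keyword matching is only a stand-in for the specialized models a real system would use.

```python
from typing import Callable

# Hypothetical phrases; a real prompt-defense layer would use a dedicated model.
SUSPICIOUS_PHRASES = ("ignore previous instructions", "reveal your system prompt")


def input_guard(prompt: str) -> str:
    """Layer 1: a deliberately naive prompt-defense check."""
    lowered = prompt.lower()
    if any(phrase in lowered for phrase in SUSPICIOUS_PHRASES):
        raise PermissionError("prompt rejected by input guard")
    return prompt


def ground(records: list[dict], user_roles: set[str]) -> list[dict]:
    """Layer 2: pass the model only records the calling user may already see."""
    return [r for r in records if r["required_role"] in user_roles]


def output_guard(text: str, blocked_terms: set[str]) -> str:
    """Layer 3: screen the draft before it reaches the user.

    blocked_terms is assumed to contain lowercase strings.
    """
    lowered = text.lower()
    if any(term in lowered for term in blocked_terms):
        return "[response withheld by output guard]"
    return text


def answer(
    prompt: str,
    records: list[dict],
    user_roles: set[str],
    model: Callable[[str, list[dict]], str],
    blocked_terms: set[str],
) -> str:
    """Run one request through all layers; the model never sees unfiltered data."""
    safe_prompt = input_guard(prompt)
    context = ground(records, user_roles)
    draft = model(safe_prompt, context)
    return output_guard(draft, blocked_terms)
```

Because the model only ever receives the filtered context, a model that misbehaves still cannot return a record the user was not allowed to see; the guards on either side narrow what can go in and what can come out.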

Practical Security Measures

Unlike the targets of traditional SQL injection, GenAI models primarily process data and have no inherent ability to execute commands. Placing separate, parameterized action-execution components downstream from the AI models allows action inputs to be strictly validated and routed through a separate consent engine. In other words, actions should be vetted and secured in the same way whether they are called from an AI component or directly by the end user. With that separation in place, the main risk lies in the potential exposure of training data or other restricted information through model outputs, rather than the execution of malicious commands.
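As an illustration, a parameterized action primitive with strict validation, an authorization check, and a consent step might look like the Python sketch below. The action name `set_profile`, the allow-list, and the `is_authorized` and `confirm` callbacks are assumptions made for this example, not an actual Valo or Salesforce API. The only Salesforce-specific fact relied on is that record IDs are 15 or 18 alphanumeric characters.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical allow-list; a real deployment would load this from policy.
ALLOWED_PROFILES = {"Standard User", "Read Only"}


@dataclass(frozen=True)
class ActionRequest:
    name: str          # which primitive to run
    params: dict       # parameters proposed by a model or typed by a user
    requested_by: str  # "ai" or "user"; recorded only, never used to relax checks


def validate_set_profile(params: dict) -> dict:
    """Strictly validate parameters for the hypothetical 'set_profile' action."""
    if set(params) != {"user_id", "profile"}:
        raise ValueError("unexpected or missing parameters")
    user_id, profile = params["user_id"], params["profile"]
    if not (isinstance(user_id, str) and user_id.isalnum() and len(user_id) in (15, 18)):
        raise ValueError("user_id must be a 15- or 18-character alphanumeric ID")
    if profile not in ALLOWED_PROFILES:
        raise ValueError("profile is not on the allow-list")
    return {"user_id": user_id, "profile": profile}


VALIDATORS: dict[str, Callable[[dict], dict]] = {"set_profile": validate_set_profile}


def execute(
    request: ActionRequest,
    is_authorized: Callable[[str, dict], bool],
    confirm: Callable[[str], bool],
) -> str:
    """Validate, authorize, and ask for consent before running any action."""
    validator = VALIDATORS.get(request.name)
    if validator is None:
        return "rejected: unknown action"
    try:
        clean = validator(request.params)
    except ValueError as exc:
        return f"rejected: {exc}"
    if not is_authorized(request.name, clean):
        return "rejected: not authorized"
    if not confirm(f"Run {request.name} with {clean}?"):
        return "cancelled by user"
    # The real side effect would happen here, using only the validated values.
    return f"executed: {request.name}"
```

The `requested_by` field never influences the outcome, so a request that originates from a model passes through exactly the same gates as one typed by an administrator.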

Practically speaking, security can be achieved through:

Access Control and Authorization: Implementing robust authentication and authorization mechanisms ensures that only authorized users and systems can access or interact with AI models. It's essential that the data used to train the AI models is authorized for viewing by the authenticated user, whether accessed directly or via the AI model. Similarly, the outputs generated by the AI should be subject to the same authorization checks as if the user were performing the action themselves, ensuring that sensitive information is not exposed or misused.

Output Control: Limiting model outputs to predefined formats or ranges (e.g., binary yes/no responses) ensures data validity. Automated downstream actions should rely on separately secured and vetted primitives with strict input-parameter controls.

Output Validation: Employing acceptance validation models to screen outputs before delivery to users, including toxicity and intent checks.

Input Validation: While controlling inputs enhances security, it's crucial to consider non-obvious inputs that might influence outcomes. Traditional and AI-specific input validation techniques should be applied to all data fields and training data used in generating outputs.

Modular Architecture: Splitting functionality across multiple models, each responsible for specific tasks or output sections, reduces the attack surface.

Dynamic Data Grounding: Ensuring AI responses are based on real-time, contextually relevant data improves accuracy and security.

Data Protection: Implementing data masking and least-privilege access techniques protects sensitive information during processing (and training).

Prompt Defense: Developing mechanisms to prevent prompt injection attacks in both user inputs and internal data used for summarization.

Audit Trails: Maintaining comprehensive logs of AI actions for monitoring and review.
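Three of the measures above (output control, data protection, and audit trails) are simple enough to sketch in a few lines of Python. The function names, the `[EMAIL]` placeholder, and the log fields are hypothetical, and the email pattern is intentionally simplistic; production masking would cover many more data types.

```python
import json
import re
from datetime import datetime, timezone

# A simplistic email pattern, used here only to illustrate masking.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def mask_emails(text: str) -> str:
    """Data protection: replace email addresses before text reaches a model or a log."""
    return EMAIL_RE.sub("[EMAIL]", text)


def constrain_to_yes_no(raw_output: str) -> str:
    """Output control: accept only a binary answer and reject anything else."""
    answer = raw_output.strip().lower().rstrip(".")
    if answer not in {"yes", "no"}:
        raise ValueError("model output outside the allowed format")
    return answer


def audit_record(actor: str, prompt: str, outcome: str) -> str:
    """Audit trail: build one JSON line per AI interaction, storing only masked text."""
    return json.dumps({
        "time": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "prompt": mask_emails(prompt),
        "outcome": outcome,
    })
```

Rejecting free-form output outright, instead of trying to sanitize it, keeps the downstream consumer's contract small: it only ever has to handle the two permitted values.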

While combining these approaches significantly mitigates risks, it's important to note that some potential for attacker maneuvering remains, especially in systems providing rich, context-aware outputs. Continuous monitoring, regular security audits, and ongoing refinement of the architecture are essential to maintain a robust security posture in GenAI-powered SaaS platforms.

The Valo Salesforce AI Admin Agent Solution

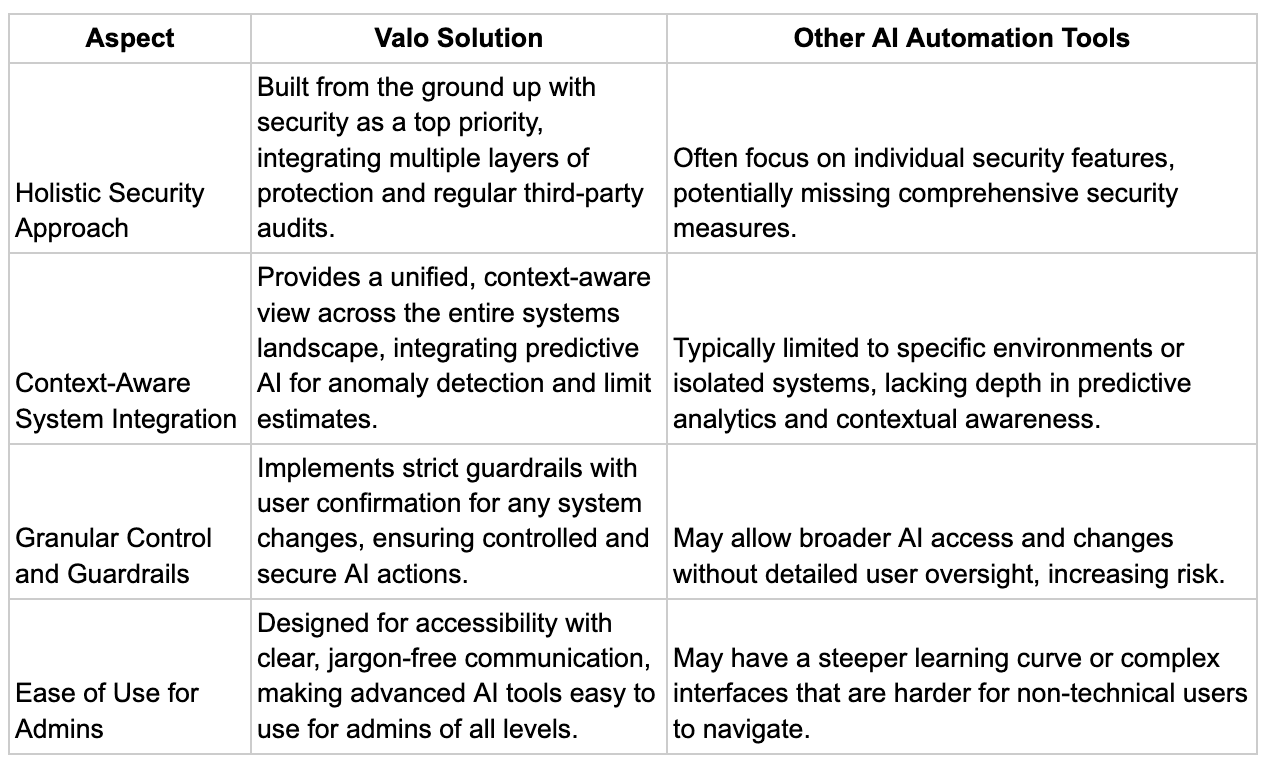

At Valo, we're revolutionizing Salesforce management automation by empowering admins with AI-driven superpowers. Our solution seamlessly integrates various AI technologies, including GenAI and predictive AI (such as anomaly detection and limit estimates), each precisely deployed within the data infrastructure to maximize utility while maintaining ironclad security.

We've built our system from the ground up with security as our top priority. Our AI implementations are governed by strict guardrails: the agent explains each action and seeks user confirmation before executing any system change. Regular third-party security audits and reviews ensure that we maintain the highest standards of data protection and system integrity.

What sets Valo apart is our ability to enrich data gathered from your Salesforce environment and beyond. Our AI doesn't just work within a single Salesforce org - it provides a comprehensive view across your entire systems landscape. This holistic approach allows us to:

Detect anomalies that might otherwise go unnoticed

Summarize crucial system nuances in easy-to-understand formats

Provide actionable insights that drive efficiency and productivity

Design holistic systems with shared policies across environments

Comparing the Valo solution with other AI automation tools:

As a Salesforce admin, you'll appreciate how our solution simplifies complex tasks, automates routine processes, and offers predictive recommendations to optimize your Salesforce environment. Our AI assistant communicates in clear, jargon-free language, making advanced system management accessible to admins of all experience levels.

The Valo “Ask!”

By choosing Valo, you're not just getting an AI tool - you're partnering with a team that's at the forefront of secure, intelligent Salesforce management. We invite you to explore how our solution can transform your Salesforce experience.

Let's work together to create a more secure, efficient, and intelligent future for Salesforce management. With Valo, you're always a step ahead in your Salesforce journey, armed with cutting-edge AI technology and uncompromising security. We look forward to meeting you at Dreamforce and showing you the power of Valo's secure AI solution in person!